This post is in response to the discussion around my recent Easy Tier performance post.

Now updated to include SPC-1/E results, since I now realise (as Dimitris highlighted) that SPC-1/E uses the same workload as SPC-1.

SPC-1 results can be seen at http://www.storageperformance.org

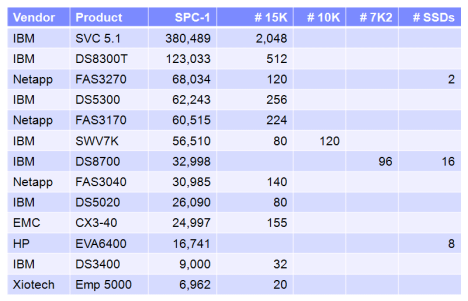

Not all of these are the latest models, but the results might give some small insight into the performance of the various architectures. The Storwize V7000 is limited to 120 internal drives until March 2011, so the SPC-1 result was submitted with an external DS5020 attached to provide extra drives. The DS8700 result shows the effects of Easy Tier with SATA RAID10.


you forgot 3270, 68K IOPS, 120 drives, RAID-DP… :)


Please mention that the SVC result of 380 kIOPS was achieved with only 4 nodes. The SVC should be able to reach nearly double that performance with an 8-node cluster.


And over 2000 drives in RAID10. Do the math on IOPS/drive: it wasn't that good. The whole point is to be able to get good efficiency out of reasonable configs.
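The IOPS/drive arithmetic can be sketched in a few lines of Python. This is only a rough illustration using the rounded figures quoted in these comments (68K IOPS on 120 drives for the 3270, 380 kIOPS on "over 2000" drives for the 4-node SVC), not the exact numbers from the SPC-1 full disclosure reports. The drive count of 2000 is an assumed lower bound, so the SVC figure is an upper bound.

```python
# Figures as quoted in this comment thread (rounded by the commenters).
results = {
    "3270": {"iops": 68_000, "drives": 120},
    # "over 2000 drives": 2000 is assumed as a lower bound, so the
    # per-drive value computed for the SVC is an upper bound.
    "SVC (4 nodes)": {"iops": 380_000, "drives": 2000},
}


def iops_per_drive(iops, drives):
    """Return SPC-1 IOPS divided by the number of drives."""
    return iops / drives


for name, r in results.items():
    print(f"{name}: {iops_per_drive(r['iops'], r['drives']):.0f} IOPS/drive")
```

With these inputs the 3270 comes out at roughly 567 IOPS/drive and the SVC at no more than 190 IOPS/drive.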


Hello Dimitris,

It is probably better to think of it the other way round!

We needed these 2000 disks to saturate the 4-node SVC. The 6-node SVC was not really in trouble with these 2000 disks.

I do not know of any other product that can handle the same high I/O load.


No EMC boxes (the one mentioned was provided by NetApp). :)

Anyway, I don’t believe much in synthetic benchmarks. The SPC-1 result is heavily dependent on the spindle count. You should look at the IOPS/$ value; this could be a much better comparison parameter.


actually I disagree – highly intelligent controllers can get far more IOPS/disk with SPC-1 vs less intelligent ones (look at the 3270 result).


SPC-1 is still highly dependent on drive count, speed and technology. Check out the results for yourself. IBM DS8300 with 512x 15K drives has more SPC-1 IOPS than IBM DS8700 with 16x SSD + 96x SATA. How yes no. :)

Unfortunately, this is comparing apples to oranges. It might be the same workload, but it highly depends on too many different parameters.

You can’t use these results as typical benchmark results where appliances are compared by speed. They can’t be compared that way. That’s why I said that it would be much better to compare price per IOPS.


I agree that $/IOPS would be a much fairer comparison. Array vendor prices vary so much that it really is eye-opening how much some large blue vendors charge versus others.
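A $/IOPS comparison is simple to compute once you have a total price and an SPC-1 IOPS figure for each configuration. The sketch below is only illustrative: `array_a`, `array_b` and their prices and IOPS values are hypothetical placeholders, not real SPC-1 submissions, and would need to be replaced with the tested-configuration price and IOPS from each published result.

```python
def dollars_per_iops(total_price_usd, spc1_iops):
    """Return price per SPC-1 IOPS for one tested configuration."""
    if spc1_iops <= 0:
        raise ValueError("spc1_iops must be positive")
    return total_price_usd / spc1_iops


# HYPOTHETICAL placeholder values: (name, total price in USD, SPC-1 IOPS).
# These are NOT real results; substitute figures from published reports.
configs = [
    ("array_a", 1_000_000, 100_000),
    ("array_b", 3_000_000, 200_000),
]

# Rank from cheapest to most expensive per IOPS.
ranked = sorted(configs, key=lambda c: dollars_per_iops(c[1], c[2]))

for name, price, iops in ranked:
    print(f"{name}: ${dollars_per_iops(price, iops):.2f} per IOPS")
```

In this made-up case `array_b` delivers twice the IOPS but costs more per IOPS, which is the kind of difference a raw IOPS chart hides.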


NetApp can also build a storage grid (GX in the past; now it’s called ONTAP 8 Cluster-Mode).

They can put multiple controllers in one big cluster.
