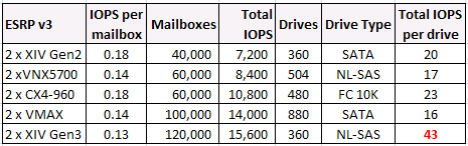

So here’s a quick comparison of XIV Gen3 and Gen2 with some competitors. Note that ESRP is designed to be more of a proof of concept than a benchmark, but it has a performance component which is relevant. Exchange 2010 has reduced disk I/O compared with Exchange 2007, which has allowed vendors to switch to 7200 RPM drives for the testing.

The ESRP reports are actually quite confusing to read: they test a fail-over situation and so require two disk systems, but some of the information in them relates to a single disk system. I have chosen to include both machines in everything for consistency. The XIV report may not be up on the website for a few days.

Once again XIV demonstrates its uniqueness in not being just another drive-dominated architecture. Performance on XIV is about intelligent use of distributed grid caches:

- XIV Gen3 returns 2.5 times the IOPS from an NL-SAS drive that a VNX5700 does.

- XIV Gen3 gets 1.8 times the IOPS from a 7200 RPM NL-SAS drive that a CX4 gets from a 10K RPM FC drive.

- Even XIV Gen2 with SATA drives can get 25% more IOPS per SATA drive than VMAX.
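
The per-drive ratios above come from dividing a system's total IOPS by its drive count and then comparing systems. Here is a minimal sketch of that arithmetic. It uses the 7,800 IOPS figure quoted in the discussion for one Gen3 XIV and assumes 180 drives, which is not stated in this post; the competitor figures are merely what the stated 2.5x and 1.8x ratios would imply, not numbers taken from the ESRP reports.

```python
def iops_per_drive(total_iops: float, drive_count: int) -> float:
    """Normalize a system-level IOPS figure to a per-drive figure."""
    if drive_count <= 0:
        raise ValueError("drive_count must be positive")
    return total_iops / drive_count


# Figure quoted in the discussion for a single Gen3 XIV.
XIV_GEN3_IOPS = 7_800
# ASSUMPTION: 180 drives in the tested system; not stated in this post.
XIV_GEN3_DRIVES = 180

xiv_gen3 = iops_per_drive(XIV_GEN3_IOPS, XIV_GEN3_DRIVES)

# Ratios taken from the bullets above; the results are implied values only.
implied_vnx5700 = xiv_gen3 / 2.5
implied_cx4 = xiv_gen3 / 1.8

print(f"XIV Gen3: {xiv_gen3:.1f} IOPS per drive")
print(f"VNX5700 (implied by 2.5x): {implied_vnx5700:.1f} IOPS per drive")
print(f"CX4 (implied by 1.8x): {implied_cx4:.1f} IOPS per drive")
```

Normalizing per drive is what makes systems with very different drive counts comparable, but it says nothing about cache size or cost, so treat it as one view of the results rather than the whole picture.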

And to answer a question asked on my earlier post: no, these XIV results do not include SSD drives, although the XIV is now SSD-ready and IBM has issued a statement of direction saying that up to 7.5TB of PCIe-SSD cache is planned for 1H 2012. Maybe that’s 15 x 500GB SSDs (one per grid node).

Thanks for taking the effort in comparing the ESRP reports. Two more columns in your chart may be useful to readers (you may have these already, but the chart is truncated in the article on my browser), those being average database disk read/write latency and also Storage Capacity Utilisation.

Can you or any other readers comment on why EMC chose to use so many drives on the VMAX configuration with a Storage capacity utilisation of 45% (as shown in their ESRP report)?

Do you reckon we will see SPC results for XIV Gen3?

It would be interesting to see a $$ per mailbox comparison – given that e.g. the VNXs would be less than $250k each and the XIVs around a cool mil each. On the other hand, the VNXs could be upsized and populated with EFDs and for still less than a mil each we would see (fanfare & applause) 497,000 IOPS @ 3.3 ms response time (cf. the official SPECsfs2008 results published Feb 2011). So – let me think now… 7,800 IOPS from 1 x Gen3 XIV versus 497,000 IOPS from one VNX – for about the same moolah – about 63 x more bang for buck from EMC. Am I missing something here???

(Note – this contributor’s comments relate to his own personal views and may or may not reflect the views of his employer)

Eugene, your fast-and-loose price speculation doesn’t add anything to the debate.

I note you made no mention of VMAX. I could have included Storwize V7000, which in many ways is a more direct comparison to both VMAX and VNX, but this post was a sampler of XIV performance on MS Exchange.

It seems bizarre to me that you would try to use an EMC NAS SPECsfs benchmark that uses 436 flash drives as a comparison point for this ESRP. I only hope you don’t charge your customers for advice like that!

Another interesting point is that the SPEC sfs results are measured in Operations Per Second … not Input/Output Operations Per Second (IOPS), which are generally measured against a fixed workload size consisting of 4K or 8K blocks in a pre-determined mix of reads and writes.

This is important because SPEC sfs measures network filesystem performance, which includes a majority of metadata operations; from memory, only about 30% of the operations actually involve reading or writing user data. As a result, that benchmark stresses the CPU of the storage controller providing the filesystem functionality more than it does the actual disk I/O and RAID subsystems. Making SPEC fly is more about how much CPU you can throw at the workload than how much disk or cache. EMC did admirably on that measure, but even so, you simply can’t take a SPEC sfs figure and compare it against an Exchange ESRP result in any kind of meaningful or relevant way.
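
To put a rough number on that, here is a small sketch that splits the 497,000 operations per second quoted above into data and metadata operations. The 30% share is my from-memory estimate, not a published figure, so treat the output as illustrative only.

```python
# SPEC sfs2008 result quoted earlier in this thread (operations per second).
SPEC_SFS_OPS = 497_000
# ASSUMPTION: roughly 30% of operations read or write user data (from memory).
DATA_OP_PERCENT = 30

# Integer arithmetic keeps the split exact.
data_ops = SPEC_SFS_OPS * DATA_OP_PERCENT // 100
metadata_ops = SPEC_SFS_OPS - data_ops

print(f"Operations touching user data: {data_ops:,} per second")
print(f"Metadata-only operations: {metadata_ops:,} per second")
```

Even the data-touching share is not a block IOPS figure, since those operations are file-level reads and writes of varying size, which is why the two benchmarks do not line up.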

For those purposes SPC-1 is closer to a mail processing workload, but even then it’s only passably comparable to Exchange 2007, and that bears little relationship to the workload characteristics of Exchange 2010.

43 IOPS per SATA/NL-SAS drive is an excellent result, though I’m curious exactly how big the working set was and how much cache was available to absorb the reads (I might have to read the whole ESRP). Having said that, as much as I disagree with some of the statements made above by the EMC fan, if, as he implies, it costs you a million extra dollars in controller hardware to save a few hundred thousand in disk, then that would seem to be a false economy.

Regards

John
