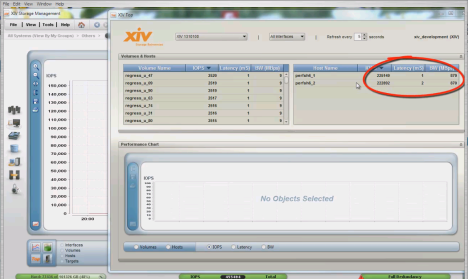

Don’t try this at home on your production systems… but it’s nice to see the XIV flying at 455 thousand IOPS. It actually peaked above 460K in this lab test, but what’s 5,000 IOPS here or there…

Thanks to Mert Baki

Filed under: XIV


Is that a single frame?

Must be – we don’t do multi-frame… yet : )

Very impressive.

Is this 4KB IO with 100% module cache hits?

Your link to the MP4 file does not appear to be accessible.

I’m guessing you’re right about the cache hits. Sorry about the link. I didn’t realise – not only was it an internal link, but it was also missing a colon (as in “http:”).

Link now deleted.

XIV Gen 3 has impressed me to the point where I’m revising my outlook. If Cache databases can become qualified, it will definitely change my opinion.

The question I have is: is 55 TB too much to ask for the increased performance?

Hi all, D from NetApp here…

Indeed, such performance is all cache hits, and with InfiniBand it can scale higher with lower latency. However, at the end of the day, cache misses still have to fall back to the 180 SATA drives.

If IBM puts in 15K drives, the thing will probably work 3x faster on cache misses, at a lower capacity point of course, but that may be OK.

I’m curious to see what the SSD caching will look like. How many TB cache max, Jim?

Thx

D
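D’s point that cache misses ultimately land on the 180 drives can be made concrete with a simple bound. Suppose the back end can sustain B random IOPS and a fraction h of host I/Os are cache hits. The host can then drive at most B / (1 − h) IOPS before the drives saturate. At h = 1 there is no drive-imposed limit at all.

The Python sketch below is a minimal model of that bound. The per-drive IOPS figures are placeholder rule-of-thumb assumptions, not XIV measurements. The model also ignores write overhead and controller/cache limits.

```python
def backend_iops(drives: int, iops_per_drive: float) -> float:
    """Aggregate random IOPS the spindles can deliver (no write penalty modelled)."""
    return drives * iops_per_drive


def max_host_iops(backend: float, hit_ratio: float) -> float:
    """Upper bound on host IOPS when only cache misses reach the drives.

    With hit_ratio == 1.0 the drives impose no limit, which is why a
    100% cache-hit test says little about back-end capability.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_ratio = 1.0 - hit_ratio
    if miss_ratio == 0.0:
        return float("inf")
    return backend / miss_ratio


# Hypothetical per-drive figures (assumptions); substitute measured values for real sizing.
PER_DRIVE_7K2 = 80.0
PER_DRIVE_15K = 180.0

for label, per_drive in [("7.2K", PER_DRIVE_7K2), ("15K", PER_DRIVE_15K)]:
    b = backend_iops(180, per_drive)
    for h in (0.0, 0.5, 0.9):
        print(f"{label}: hit ratio {h:.0%} -> at most {max_host_iops(b, h):,.0f} host IOPS")
```

The drive count of 180 comes from the comment above. Everything else is an illustrative input.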

This architecture is designed to be as independent of spindle count as possible. The grid is built from fifteen modules:

- fifteen distributed caches of 24 GB, each looking after only 12 drives;
- fifteen quad-core CPUs, each doing cache planning for only 12 drives;
- fifteen PCI and SAS buses, each moving data to and from cache for only 12 drives.

The net result is that you can be far smarter about caching and move a lot of data preemptively without choking the pipes. Traditional architectures would choke if they tried to do the same thing with a big centralized cache.
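The per-module figures in the reply above can be checked with a little arithmetic. The sketch below takes the stated numbers (15 modules, each with 24 GB of cache and 12 drives). It shows that the overall cache-to-drive ratio is the same as a single 360 GB cache over 180 drives would have. The architectural difference being argued is that each 24 GB slice has its own CPU and its own PCI/SAS path. The `Module` class and `grid_totals` function are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    """One grid module as described in the reply: local cache plus its own drives."""
    cache_gb: int
    drives: int


def grid_totals(modules: list[Module]) -> dict[str, float]:
    """Aggregate cache and drive counts across all modules."""
    total_cache = sum(m.cache_gb for m in modules)
    total_drives = sum(m.drives for m in modules)
    return {
        "modules": len(modules),  # independent cache/CPU/bus paths
        "total_cache_gb": total_cache,
        "total_drives": total_drives,
        "cache_gb_per_drive": total_cache / total_drives,
        "drives_per_cache_path": total_drives / len(modules),
    }


grid = [Module(cache_gb=24, drives=12) for _ in range(15)]
print(grid_totals(grid))
# {'modules': 15, 'total_cache_gb': 360, 'total_drives': 180, 'cache_gb_per_drive': 2.0, 'drives_per_cache_path': 12.0}
```

The totals alone do not distinguish a grid from a monolithic array. The point of the design is the `drives_per_cache_path` figure: each path only has to serve 12 drives.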

Can’t really say more about the SSD layer other than what has been said in the official statement of direction.

“IBM XIV Storage System Gen3 will be upgradeable to an SSD Caching option which is planned for the first half of 2012. The SSD caching option is being designed to provide up to 7.5 TB of fast read cache to support and up to a 90% reduction in I/O latency for random read workloads.”

http://bit.ly/pak5be

But can I risk saying that the SSD fun won’t stop there…
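The statement of direction quotes “up to a 90% reduction in I/O latency for random read workloads”. How much of that materialises depends on what fraction of reads the SSD layer actually serves. A simple weighted-average model makes the dependence visible.

This is a sketch under assumed latencies. The 8 ms disk and 0.5 ms SSD figures are placeholders, not IBM numbers. The model also ignores queueing effects.

```python
def effective_read_latency_ms(hit_ratio: float, ssd_ms: float, disk_ms: float) -> float:
    """Average read latency when hit_ratio of reads are served from the SSD cache."""
    return hit_ratio * ssd_ms + (1.0 - hit_ratio) * disk_ms


def latency_reduction(hit_ratio: float, ssd_ms: float, disk_ms: float) -> float:
    """Fractional latency reduction versus serving every read from disk."""
    return 1.0 - effective_read_latency_ms(hit_ratio, ssd_ms, disk_ms) / disk_ms


def hit_ratio_for_reduction(target: float, ssd_ms: float, disk_ms: float) -> float:
    """Hit ratio needed for a target reduction.

    From the model above: reduction = h * (1 - ssd_ms / disk_ms),
    so h = target / (1 - ssd_ms / disk_ms).
    """
    return target / (1.0 - ssd_ms / disk_ms)


# Placeholder latencies, assumed for illustration only.
SSD_MS, DISK_MS = 0.5, 8.0

print(f"{latency_reduction(0.5, SSD_MS, DISK_MS):.1%}")        # 46.9%
print(f"{hit_ratio_for_reduction(0.9, SSD_MS, DISK_MS):.1%}")  # 96.0%
```

Under these assumptions, a 90% reduction needs roughly 96% of random reads to hit the SSD layer. That is why the quoted figure is an “up to”.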

When trying to understand XIV, people mostly struggle to move their thinking away from a traditional funnel cache architecture, so they apply assumptions that are simply not valid.

As an example of how architectural differences need to be taken into account, take your NetApp world and think of the way WAFL and NVRAM work together in a FAS. Someone might say that on a FAS you can only get the same number of RAID6 random writes per disk drive as on a VNX. That’s just disk drive mechanics, they might say, and on the surface that sounds like a fair enough comment.

However, that fails to take into account that NVRAM/WAFL excels at coalescing random writes into full-stripe writes. If you can convert random I/O into full-stripe I/O, then you avoid the extra parity I/Os and thereby get more data write I/Os per drive.

So that’s just an example of why you can’t always apply generic rules to different architectures. You can’t apply simple disk drive maths to XIV – it’s a distributed cache grid – the drives are less important in XIV than in any other disk system ever invented.
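The full-stripe argument above can be quantified with the textbook RAID 6 write costs:

- A small random write done as read-modify-write costs six disk I/Os: read the old data, P and Q, then write the new data, P and Q.
- A full-stripe write across n data drives plus two parity drives costs n + 2 writes for n blocks, with no reads.

The sketch below assumes a hypothetical 8+2 group and a placeholder 100 IOPS per drive. It models generic RAID 6 arithmetic, not WAFL’s actual allocation logic.

```python
RMW_IOS_PER_WRITE = 6  # read data, P, Q + write data, P, Q


def full_stripe_ios_per_block(data_drives: int, parity_drives: int = 2) -> float:
    """Disk I/Os per host block when writes are coalesced into full stripes."""
    return (data_drives + parity_drives) / data_drives


def host_write_iops(total_drives: int, iops_per_drive: float, ios_per_write: float) -> float:
    """Host write IOPS the drives can absorb at a given disk cost per host write."""
    return total_drives * iops_per_drive / ios_per_write


# Hypothetical 8+2 group and placeholder per-drive IOPS (assumptions).
DRIVES, PER_DRIVE = 10, 100.0

random_rmw = host_write_iops(DRIVES, PER_DRIVE, RMW_IOS_PER_WRITE)
coalesced = host_write_iops(DRIVES, PER_DRIVE, full_stripe_ios_per_block(8))
print(f"read-modify-write: {random_rmw:.0f} IOPS, full stripe: {coalesced:.0f} IOPS")
# read-modify-write: 167 IOPS, full stripe: 800 IOPS
```

The drives are identical in both cases. Only the I/O pattern reaching them changes, which is the point being made about not applying simple per-drive maths across architectures.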

I’m a long-time NetApp guy who finds himself in a shop with both XIV and NetApp. I’ve got to say the simplicity of XIV and its ability to hit higher read rates without PAM is pretty awesome. I guess the new SSD option for XIV will really compare with PAM.

As for CACHE, ironically we have an instance running on a vFiler backed by a gen2 XIV. It’s not a production implementation but performs quite well.

Any test using IBM i + VIOS? IBM i workloads are very sensitive to cache, but also to disk speed, because of I/O features.

To put it another way: if I have an IBM i with 80 15K rpm U320 disks, using 1GB cache each, can I replace them with an IBM XIV G3?

The answer is maybe yes, but IBM i internal storage is pretty easy and VIOS adds an extra thing to think about, so you’d have to have a reason for doing that. Also 80 drives maybe implies less than 20TiB capacity, so if you do go external then Storwize V7000 might be more practical/economical for a site that size.

This is 100% cache hits – look at the latency! You cannot get those results in “real” OLTP workloads. Let me see the back-end performance; with those SAS-interfaced “SATA” disks, it will suffer. In short, this does not mean anything. You could even see a million IOPS if you could put a terabyte of memory into that… Thanks.

[…] Cache has really good write performance (remember the screenshot from July last year showing almost 500,000 IOPS 4KB write hits at around 1 second latency?) and now with Flash Cache the read performance gets to […]
