Please note that my more recent blog articles are now being posted on LinkedIn

https://www.linkedin.com/in/jimkellynz/detail/recent-activity/posts/

Filed under: Cloud | Leave a comment »

Shared Nothing or Loosely Coupled?

https://www.linkedin.com/pulse/shared-nothing-loosely-coupled-jim-kelly

Filed under: Uncategorized | Leave a comment »

S3 on-premises for your devs @<$10K

https://www.linkedin.com/pulse/s3-on-premises-your-devs-10k-jim-kelly

Filed under: Uncategorized | Leave a comment »

Four Ideas to Modernize your Network Strategy

https://www.linkedin.com/pulse/four-ideas-modernize-your-network-strategy-jim-kelly

Filed under: Uncategorized | Leave a comment »

I’ve written before about object storage and scale-out software-defined storage. These seem to be ideas whose time has come, but I have also learned that the economics of these solutions need to be examined closely.

If you look to buy high function storage software, with per TB licensing, and premium support, on premium Intel servers with premium support, then my experience is that you have just cornered yourself into old-school economics. I have made this mistake before. Great solution, lousy economics. This is not what Facebook or Google does, by the way.

If you’re going to insist on premium-on-premium then, unless you have very specific drivers for SDS, or extremely large scale, you might be better off buying an integrated storage-controller-plus-expansion-trays solution from a storage hardware vendor (and make sure it’s one that doesn’t charge per TB).

With workloads such as analytics and disk-to-disk backups, we are not dealing with transactional systems of record and we should not be applying old-school economics to the solutions. Well managed risk should be in proportion to the critical availability requirements of the data. Which brings me to Open Source.

Open Source software has sometimes meant complexity, poorly tested features, and bugs that require workarounds, but the variety, maturity and general usability of Open Source storage software have been steadily improving, and feature/bug risks can be managed. The pay-off is software at $0 per usable TB instead of US$1,500 or US$2,000 per usable TB (seriously folks, I’m not just making these vendor prices up).

It should be noted that open source software without vendor support is not the same as unsupported. Community support is at the heart of the Open Source movement. There are also some Open Source storage software solutions that offer an option for full support, so you have choice about how far you want to go.

It’s taken us a while to work out that we can and should be doing all of this, rather than always seeking the most elegant solution, or the one that comes most highly recommended by Gartner, or the one that has the largest market share, or the newest thing from our favorite big vendors.

It’s not always easy and a big part of the success is making sure we can contain the costs of the underlying hardware. Documentation and quoting and design are all considerably harder in this world, because you’re going to have to work out a bunch of this for yourself. Most integrators just don’t have the patience or skill to make it happen reliably, but those that do can deliver significant benefits to their customers.

Right now we’re working on solutions based on S3, iSCSI or NFS scale-out storage, with options for community or full support. Ideal use cases are analytics, backup target storage, migration off AWS S3 to on-premises to save cost, and test/dev environments for those who are deploying to Amazon S3, but I’m sure you can think of others.

Filed under: Cloud, GPFS, Hyperconverged Storage, VMware | Leave a comment »

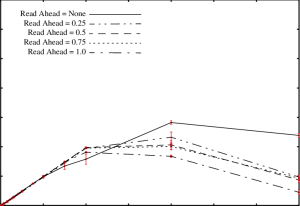

Just a short one to relate an experience and sound a warning about the wonderful modern invention of read ahead cache.

Let me start by quoting an arstechnica post from 2010:

I have this long-running job (i.e. running for MONTHS) which happens to be I/O-bound. I have 8 threads, each of which sequentially reads from an 80GB file, loads it into a specialized database, and then moves on to the next 80GB file. The machine has four CPUs, but the concurrency level was chosen empirically to get the maximum I/O throughput.

Today I was pondering how I could make this job finish before I die, and after some googling around I found you can jack up Linux’s read-ahead buffers to improve sequential reads. Basically it makes the kernel seek less, and slurp in more data before it moves on to the next operation. This is a good trade if you have tons of free memory.

Well, needless to say I was shocked at the improvement this brings. I set the readahead from the default of 256 (== 128KiB) to 65536 (== 32MiB) and the IO jumped way, way up. According to sar, in the ten-minute period before I made the change the input rate was 39.3MiB/s. In the first ten minute falling entirely after I made the change, the input rate was 90.0MiB/s. Output rate (to the database) leaped from 6MiB/s to 20MiB/s. CPU iowait% dropped from 49% to 0% , idle% dropped from 13% to 0%, and user% jumped from 37% to 97%.

In other words, this one simple command changed my workload from IO-bound to CPU-bound. I am using RHEL5, Linux 2.6.18.

blockdev --setra 65536 /dev/md0

Sounds great!

Why not make that the default setting for everything?

So here’s why not.

Without going into customer specific details I can tell you that right here in 2017 some workloads are very random, and truly random reads benefit very little from read ahead cache. In fact what can happen is that the storage just gets jammed up feeding data to the read ahead cache. If every 128 KiB random read gets translated into a 32 MiB read ahead and you start hitting high I/O rates then you can expect latency to go through the roof, and no amount of tuning at the storage end is going to help you.
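To put rough numbers on that, here is a minimal sketch of the arithmetic. It assumes the worst case, where every random read misses the cache and triggers a full read-ahead, and the 1,000 reads per second figure is a hypothetical workload rate, not a customer measurement.

```python
# Rough read-amplification arithmetic for random reads with a large read-ahead.
# Assumption: every random read misses cache and triggers a full read-ahead.

KIB = 1024
MIB = 1024 * KIB
SECTOR_BYTES = 512  # blockdev --setra is expressed in 512-byte sectors


def readahead_bytes(setra_sectors: int) -> int:
    """Convert a blockdev --setra value into bytes."""
    return setra_sectors * SECTOR_BYTES


def backend_mib_per_sec(iops: int, bytes_per_io: int) -> float:
    """Throughput needed to service `iops` reads of `bytes_per_io` each."""
    return iops * bytes_per_io / MIB


app_io = 128 * KIB              # what the application actually asked for
ra = readahead_bytes(65536)     # what the kernel fetches with --setra 65536
amplification = ra / app_io
iops = 1000                     # hypothetical random read rate

print(f"read-ahead size : {ra // MIB} MiB")
print(f"amplification   : {amplification:.0f}x")
print(f"useful data     : {backend_mib_per_sec(iops, app_io):.0f} MiB/s")
print(f"data fetched    : {backend_mib_per_sec(iops, ra):.0f} MiB/s")
```

Under these assumptions the storage is asked to move 256 times more data than the application will ever use, which is why tuning at the storage end cannot rescue the latency.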

So, if you’re diagnosing latency problems on a heavy random read workload, remember to ask your server admins about their read ahead settings.

Filed under: Uncategorized | Leave a comment »

Hey folks, just a quick post for you based on recent experience of IBM’s NAS Comprestimator utility for Storwize V7000 Unified where it completely failed to predict an outcome that I had personally predicted 100% accurately, based on common sense. The lesson here is that you should read the NAS Comprestimator documentation very carefully before you trust it (and once you read and understand it you’ll realize that there are some situations in which you simply cannot trust it).

We all know that Comprestimator is a sampling tool right? It looks at your actual data and works out the compression ratio you’re likely to get… well, kind of…

Let’s look first at the latest IBM spiel at https://www-304.ibm.com/webapp/set2/sas/f/comprestimator/home.html

“The Comprestimator utility uses advanced mathematical and statistical algorithms to perform the sampling and analysis process in a very short and efficient way.”

Cool, advanced mathematical and statistical algorithms – sounds great!

But there’s a slightly different story told on an older page that is somewhat more revealing http://m.ibm.com/http/www14.software.ibm.com/webapp/set2/sas/f/comprestimator/NAS_Compression_estimation_utility.html

“The NAS Compression Estimation Utility performs a very efficient and quick listing of file directories. The utility analyzes file-type distribution information in the scanned directories, and uses a pre-defined list of expected compression rates per filename extension. After completing the directory listing step the utility generates a spreadsheet report showing estimated compression savings per each file-type scanned and the total savings expected in the environment.

It is important to understand that this utility provides a rough estimation based on typical compression rates achieved for the file-types scanned in other customer and lab environments. Since data contained in files is diverse and is different between users and applications storing the data, actual compression achieved will vary between environments. This utility provides a rough estimation of expected compression savings rather than an accurate prediction.“

The difference here is that one is for NAS and one is for block, but I’m assuming that the underlying tool is the same. So, what if you have a whole lot of files with no extension? Apparently Comprestimator then just assumes 50% compression.

Below I reveal the reverse-engineered source code for the NAS Comprestimator when it comes to assessing files with no extension, and I release this under an Apache licence. Live Free or Die people.

#include <stdio.h>

int main(void)

{

/* "%%" prints a literal percent sign; a bare "%" would be read as a format specifier */

printf("IBM advanced mathematical and statistical algorithms predict the following compression ratio: 50%% \n");

return 0;

}

enjoy : )
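Joking aside, the extension-lookup approach described in the IBM text quoted above can be sketched in a few lines. This is my own illustration, not IBM's code: the per-extension savings figures are hypothetical, and the 50% default is only what the tool appeared to assume for files with no extension.

```python
from collections import defaultdict
from pathlib import PurePath

# Hypothetical expected savings (percent of space saved) per file extension.
# Illustrative numbers only; this is not IBM's table.
EXPECTED_SAVINGS_PCT = {".txt": 70, ".log": 75, ".jpg": 2, ".zip": 0}
DEFAULT_SAVINGS_PCT = 50  # assumed fallback for unknown or missing extensions


def estimate_savings(files):
    """Estimate savings from a directory listing of (name, size_bytes) pairs.

    Returns (total_bytes, estimated_saved_bytes, bytes_per_extension).
    File contents are never read; only names and sizes are used.
    """
    per_ext = defaultdict(int)
    total = 0
    weighted = 0  # sum of size * percent, divided by 100 at the end
    for name, size in files:
        ext = PurePath(name).suffix.lower()
        pct = EXPECTED_SAVINGS_PCT.get(ext, DEFAULT_SAVINGS_PCT)
        per_ext[ext or "(none)"] += size
        total += size
        weighted += size * pct
    return total, weighted / 100, dict(per_ext)


listing = [("report.txt", 1000), ("photo.jpg", 1000), ("datafile", 8000)]
total, saved, per_ext = estimate_savings(listing)
print(f"estimated savings: {100 * saved / total:.1f}% of {total} bytes")
print(per_ext)
```

When most of the capacity sits in files with no extension, the estimate is dominated by the default, whatever those files actually contain.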

Filed under: Commvault, GPFS, storage, Storwize V7000 | 1 Comment »

… Here I am stuck in the middle with you (with apologies to Stealers Wheel)

Is Cloud forking? Lean mean Containers on the one hand, and fat rich Platform-as-a-Service on the other? Check out my latest blog post here and find out.

Filed under: Cloud | Leave a comment »

Check out my latest blog post at http://hubs.ly/H02PJ4r0

Filed under: Cloud, GPFS, N Series, Nimble, SONAS, storage | Leave a comment »

Tempted as I am to start explaining what containers are and why they make sense, I will resist that urge and assume for now you have all realised that they are a big part of our future whether that be on-premises or Public Cloud-based.

Containers are going to bring as much change to Enterprise IT as virtualization did back in the day, and knowing how to do it well is vital to success.

ViFX is bringing Ben Corrie, Containers super-guru, to New Zealand to help get the revolution moving.

Ben blogged about the potential for containers back in June 2015. Click on his photo for a quick recap:

Register now to hear one of the key architects of change in our industry speak in Auckland and Wellington in April, along with a deep dive and demos in a 3-hour session. I would suggest to those further afield that this is also worth flying in from Australia, Christchurch etc.

~

And since it’s been a while since I finished a post with a link to youtube, here is The Fall doing “Container Drivers“.

Filed under: Cloud, VMware | Leave a comment »

As a follow-up to my earlier post comparing Object Storage to Block Storage, here’s a quick infographic to remind you of some of the key differences.

Object storage infographic 160321

Filed under: Uncategorized | Leave a comment »

Object Storage that is…

By now most of us have heard of Object Storage and the grand vision that life would be simpler if storage spent less time worrying about block placement on disks and walking up and down directory trees, and simply treated files like objects in a giant bucket, i.e. a more abstracted, higher level way to deal with storage.

My latest blog post is all about how Object Storage differs from traditional block and file, and also contains a bit of a drill down on some of the leading examples in the market today.

Head over to http://www.vifx.co.nz/blog/he-treats-me-like-an-object for the full post.

Filed under: Cloud, GPFS, Hyperconverged Storage | Leave a comment »

What is object storage and how does it differ from block and file?

Sign up for a free Object Storage seminar – discussion & examples – Tues, 16th Feb, 12-1.30pm, ViFX Auckland. Lunch will be provided.

https://www.eventbrite.co.nz/e/object-storage-auckland-registration-19772808001

Filed under: Cloud, storage, VMware | Leave a comment »

The market for IT infrastructure components, including servers and storage, continues to fragment as the few big players of five years ago are augmented by a constant stream of new entrants and maturing niche players, but some things haven’t changed.

It should go without saying that choices in IT infrastructure should be driven by identified requirements. Requirements are informed by IT and business strategy and culture, and it is also perfectly reasonable that requirements are influenced by the personal comfort zones of those tasked with accountability for decisions and service delivery.

I once had a customer tell me that “My IT infrastructure strategy is Sun Microsystems” which was perhaps taking a personal comfort zone and brand loyalty a little too far. His statement told me that he did not really have an IT infrastructure strategy at all since he was being brand-led rather than requirements-driven.

Comfort zones can be important because they send us warning signals that we should assess risks, but I think we all recognise that they should not be used as an excuse to repeat what we have always done just because it worked last time.

I had an astute customer tell me recently that his current very flexible solution had served him well through a wide range of changes and significant growth over the last ten years, but that his next major infrastructure buying decision would probably be a significant departure in technology because he was looking to establish a platform for the next ten years, not the last ten years.

Any major investment opportunity in IT infrastructure is an opportunity to move the needle in terms of value and efficiency. To simply do again what you did last time is an opportunity missed.

Most of us realise that we are all prone to apply our own style of decision-making with its inevitable strengths and weaknesses. Personal decision-making is then layered with the challenges of teams and interaction as all of the points of view come together (hopefully not in a head-on collision). Knowing our strengths and weaknesses and how we interact in teams can help us to make a balanced decision.

Some multi-criteria mathematical theories claim that there is always ultimately a single rational choice, but ironically even mathematicians can’t agree on that. Bernoulli’s expected utility hypothesis for example suggests that there are multiple entirely rational choices depending on simple factors like how risk-averse the decision-makers are. Add to that the effect of past experience (Bayesian inference for the hard core) and mathematics can easily take you in a circle without really making your decision any more objective.

Knowing all of this, it is still useful to layer some structure onto our decision-making to ensure that we are focused on the requirements and on the end goal of essential business value. For example, the use of weightings has been a relatively common way of trying to introduce some objectivity into proposal evaluations.
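As a minimal sketch of how weightings work in a proposal evaluation, consider the following. The criteria, weights and ratings are all hypothetical, chosen only to show the mechanics.

```python
def weighted_score(ratings, weights):
    """Weighted average of ratings, using one weight per criterion."""
    if set(ratings) != set(weights):
        raise ValueError("ratings and weights must cover the same criteria")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(ratings[c] * weights[c] for c in weights) / total_weight


# Hypothetical criteria and weights (they need not sum to 100).
weights = {"cost": 40, "performance": 30, "supportability": 20, "vendor_viability": 10}

# Hypothetical ratings on a 0 to 5 scale.
proposals = {
    "Option A": {"cost": 3, "performance": 5, "supportability": 4, "vendor_viability": 5},
    "Option B": {"cost": 5, "performance": 3, "supportability": 3, "vendor_viability": 3},
}

ranked = sorted(proposals, key=lambda name: weighted_score(proposals[name], weights), reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(proposals[name], weights):.2f}")
```

The weights are where the subjectivity hides: shift enough weight onto cost and Option B overtakes Option A, which is why the weights are best agreed before the proposals are opened.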

Many of you will be familiar with the NIST definition of Cloud as having five essential characteristics which we have previously discussed on this blog. One way to measure the overall generic quality of a cloud offering is to evaluate that offering against the five characteristics, but I am suggesting that we take that one step further and that these essential characteristics can also be applied more broadly to any infrastructure decision as a first pass highlighter of relative merit and essential value.

In client specific engagements, if you were going to measure five qualities, it might make more sense to tailor the characteristics measured to specific client requirements, but as a generic first-pass tool we can simply use these five approximated NIST characteristics:

In pursuit of essential value, the modified NIST essential characteristics can be evaluated to arrive at a “web of essential value” by rating the options from zero to five and plotting them onto a radar diagram.
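For anyone who wants to build such a diagram, here is a small sketch of the plumbing. It uses NIST's five original characteristic names as the axes, which is an assumption standing in for the modified list, and the ratings for the sample option are hypothetical.

```python
import math

# Assumption: NIST's five original essential characteristics used as axes.
AXES = [
    "on-demand self-service",
    "broad network access",
    "resource pooling",
    "rapid elasticity",
    "measured service",
]


def radar_points(ratings, max_rating=5):
    """Map one rating per axis to (x, y) vertices of the web.

    The first axis points to 12 o'clock; the rest follow clockwise.
    """
    if len(ratings) != len(AXES):
        raise ValueError("expected one rating per axis")
    if any(r < 0 or r > max_rating for r in ratings):
        raise ValueError(f"ratings must be between 0 and {max_rating}")
    step = 2 * math.pi / len(AXES)
    return [(r * math.sin(i * step), r * math.cos(i * step)) for i, r in enumerate(ratings)]


def text_web(name, ratings, max_rating=5):
    """Plain-text stand-in for the radar diagram (whole-number ratings only)."""
    radar_points(ratings, max_rating)  # reuse the validation
    lines = [name]
    for axis, r in zip(AXES, ratings):
        lines.append(f"  {axis:<24}{'#' * r}{'.' * (max_rating - r)} {r}")
    return "\n".join(lines)


option = [4, 5, 3, 2, 4]  # hypothetical ratings for one option
print(text_web("Option A", option))
print(radar_points(option))
```

The vertex list can be handed to any plotting tool to draw the web itself; the text version is enough for a quick side-by-side comparison.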

You still have to do all your own analysis so I don’t think we’re going to be threatening the Forrester Wave or the Gartner Magic Quadrant any time soon. Rather than being a way to present pre-formed analysis and opinion, WEV is a way for you to think about your options with an approach inspired by NIST’s definition of Cloud essential characteristics.

WEV is not intended to be your only tool, but it might be an interesting place to start in your evaluations.

The next time you have an IT infrastructure choice to make, why not start with the Web of Essential Value? I’d be keen to hear if you find it useful. The only other guidance I would offer is not to be too narrow in your interpretation of the five essential characteristics.

I wish you all good decision-making.

Filed under: Cloud | Leave a comment »

I’ve been brushing up on my W. Edwards Deming recently so I’ve been thinking a lot about change and how change does not always come easily. Markets keep changing and companies as well as people need to learn to adapt.

We can probably all recall brands that were dominant once, but now have faded. Some of the famous brands of my parents’ generation, like Jaguar cars and British Seagull, faded quickly in the face of German and Japanese innovation and quality.

One of the most shocking examples is Eastman Kodak. Founded in 1888, they held around 90% of the film market in the United States during the 1970s and went on to invent much of the technology used in digital cameras. But essentially they were a film company and when their own invention overtook them they were not prepared. Kodak eventually emerged from Chapter 11 in 2013 with a very different and much smaller business. Do we say that this was a complete failure of Kodak’s management in the 1970s, or do we say that it was almost inevitable given the size of Kodak and the complete and rapid technological change that occurred?

Even very large, well established companies can cope with rapid technological change if they react appropriately. It is possible to turn a big ship. Two examples from the Information Technology industry, Hewlett Packard (est. 1939) and IBM (est. 1911) have, so far, managed to adapt as their markets undergo huge change. The future is always uncertain and both have suffered major setbacks at times, but both continue to be top tier players in their target markets.

Of those who failed to adapt, another famous example is Firestone. Founded in 1900 they followed Ford into the automobile era, but failed to keep up with the market move to radial tyres in the late 1960s. They then ran into several years of serious manufacturing quality problems which greatly reduced their brand value. In 1988 they were purchased by Bridgestone of Japan. One interesting thing about Firestone was that they had an unusually homogeneous management team, most of whom lived in Akron, Ohio and many of whom were born there. In management studies this homogeneity has come under some suspicion as a contributing factor to their reluctance and then inability to innovate. It might be significant if we compare that with the strife that IBM got into in the early 1990s and the board’s decision to appoint the first outsider CEO who subsequently turned the company around at that time, a feat that was at least in part attributed to his lack of emotional attachment to past decisions.

These are big bold examples and we can no doubt find other examples closer to home.

With brands and whole companies, failures to innovate will eventually become obvious, but with projects and IT departments, the consequences of failure to innovate can be less obvious for a time.

So why would anyone choose to avoid innovation and change? I can think of four reasons straight off.

These are all examples of what Edward De Bono would call Black Hat thinking, which is very common in the world of I.T. Black Hat thinking is of course valid as part of a broader consideration, but it is not a substitute for broader consideration.

Perhaps the biggest thematic change in I.T. Infrastructure over the years has been commoditization. My background is largely in storage systems and I see commoditization as having a huge impact. In the past storage vendors have made use of commodity hardware, but integrated it into their products so that the products themselves were not commoditized.

It is no secret among I.T. vendors that manufacturer margins are dramatically higher on storage systems than on servers so new storage solutions based on truly commoditized servers can be expected to have a significant impact.

Not only does hardware commoditization underpin most cloud services like Azure, AWS and vCloud Air, but hardware commoditization is also a driver behind on-premises hyper-converged storage systems like VMware Virtual SAN. With hardware commoditization, the value piece of the pie becomes much more focused on the software function.

But hardware commoditization is only one example of change in our industry. The real issue is one of being willing to take advantage of change.

I started off this post with a reference to W. Edwards Deming. 70 years ago he put forward his ideas on continuous improvement and those ideas are currently enjoying a new lease of life expressed through ITIL.

Three of the questions Deming said we need to ask ourselves are:

Together the answers to these questions help us to form a strategy.

External IT consultants can be useful in all three of these steps in helping to frame the challenges against a background of cross-pollinated ideas and capabilities from around the market. Consultants can also help you to consider the realistic bite sizes for innovation and the associated risks. But ultimately change and innovation are something that we all have to take responsibility for. And like they sing in Memphis, Change Don’t Come Easy.

[a version of this post was originally released at http://www.vifx.co.nz/blog/embracing-cloud-innovation]

Filed under: The Nature of Man | Leave a comment »

I have been watching the multi-site distributed NAS space for some years now. There have been some interesting products including NetApp’s FlexCache which looked nice but never really seemed to get market traction, and similarly IBM Global Active Cloud Engine (Panache) which was released as a feature of SONAS and Storwize V7000 Unified. Microsoft have played on the edge of this field more successfully with DFS Replication although that does not handle locking. Other technologies that encroach on this space are Microsoft SharePoint and also WAN acceleration technologies like Microsoft BranchCache and Riverbed.

What none of these have been very good at, however, is solving the problem of distributed collaborative authoring of large, complex, multi-layered documents, such as cross-referenced CAD drawings, with high performance and sturdy locking.

It’s no surprise that the founders of Panzura came from a networking background (Aruba, Alteon) since the issues to be solved are those that are introduced by the network. Panzura is a global file system tuned for CAD files and it’s not unusual to see Panzura sites experience file load times less than one tenth or sometimes even one hundredth of what they were prior to Panzura being deployed.

Rather than just provide efficient file locking however, Panzura has taken the concept to the Cloud, so that while caching appliances can be deployed to each work site, the main data repository can be in Amazon S3 or Azure for example. Panzura now claims to be the only proven global file locking solution that solves cross-site collaboration issues of applications like Revit, AutoCAD, Civil3D, and Bentley MicroStation as well as SOLIDWORKS CAD and Siemens NX PLM applications. The problems of collaboration in these environments are well-known to CAD users.

Panzura has been growing rapidly, with 400% revenue growth in 2013 and they have just come off another record quarter and a record year for 2014. Back in 2013 they decided to focus their energies on the Architectural, Engineering & Construction (so-called AEC) markets since that was where the technology delivered the greatest return on customer investment. In that space they have been growing more than 1000% per year.

ViFX recently successfully supplied Panzura to an international engineering company based in New Zealand. If you have problems with shared CAD file locking, please contact ViFX to see how we can solve the problem using Panzura.

Filed under: Cloud, N Series, SONAS, Storwize V7000 | Leave a comment »

My wife has been complaining that we don’t have enough cupboard space, both in the kitchen, and also for linen. On the weekend we bought a dining room cabinet, and that allowed my wife to reorganize the kitchen cupboards and pantry.

What came to light was that the pantry in particular was so overloaded that it was very difficult to tell what was in there, and as a result we discovered that there were six bottles of cooking oil (three of rice bran oil, three of olive oil), three containers with standard flour, two with high grade flour, two with rice, two with brown sugar, two with white sugar, two with opened packets of malt biscuits, two with opened packets of crackers etc.

More capacity is always nice. My wife’s solution involved spending money on buying additional capacity, and also effort to select and install the cabinet, and hours to sort through the existing cupboards and drawers and pantry to work out what was there and decide where best to put things.

I have however always maintained that the real problem is that we own too much stuff. If the cupboards had been better organised in the first place, we would have owned fewer duplicates, and the odds are we would not have needed the new cabinet. But new capacity is always nice.

I am sure you have realised by now that the parallel with the world of IT Storage did not escape me. If I had to pay for ongoing support on the new cabinet and I knew it was only going to last 5 years, I would have been less keen on the acquisition and would have pushed back harder with the “we own too much stuff” line.

It seems that it’s easier to add more capacity than to ask the hard questions, but that’s not always a wise use of money.

To read more about right-sizing check out http://www.vifx.co.nz/iaas-not-as-is/

Filed under: The Nature of Man | Leave a comment »

Back in 2011 I blogged about buying a new car, in a post entitled The Anatomy of a Purchase. Well, the transmission on the Jag has given out and I am now the proud owner of a Toyota Mark X.

The anatomy of the purchase was however a little different this time. Over the last 4 years I found that the official Jaguar service agents (25 km away) offered excellent support. 25 km is not always a convenient distance however, so I did try using local neighbourhood mechanics for minor things, but quickly realized that they were going to struggle with anything more complicated.

When it came to buying a replacement, the proximity of a fully trained and equipped service agent became my number one priority. There is only one such agency in my neighbourhood, and that is Toyota, so my first decision was that I was going to buy a Toyota.

Coming from a traditional I.T. vendor background my approach to I.T. support has always been that it should be fully contracted 7 x 24, preferably with a 2 hour response time, for anything that business depended on. But something has changed.

The support requirements for software haven’t really changed, but hardware is now a different game. Clustered systems, scale-out systems, web-scale systems, including hyper-converged (server/storage) systems will typically quickly re-protect a system after a node failure, thereby removing the need for panic-level hardware support response. Scale-out systems have a real advantage over standalone servers and dual controller storage systems in this respect.

It has taken me some time to get used to not having 7×24 on-site hardware support, but the message from customers is that next-business-day service or next+1 is a satisfactory hardware support model for clustered mission-critical systems.

Nutanix gold level support for example, offers next-business-day on-site service (after failure confirmation) or next+1 if the call is logged after 3pm so, given a potential day or two delay, it is worth asking the question “What happens if a second node fails?”

If the second node failure occurs after the data from the first node has been re-protected, then there will only be the same impact as if one host had failed. You can continue to lose nodes in a Nutanix cluster provided the failures happen after the short re-protection time, and until you run out of physical space to re-protect the VMs. (Readers familiar with the IBM XIV distributed cache grid architecture will also recognise this approach to rinse-and-repeat re-protection.)
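The "until you run out of physical space" limit can be estimated with simple arithmetic. The sketch below is a deliberately simplified model of my own, not Nutanix's actual algorithm: it assumes identical nodes, a fixed number of copies of every block, failures that arrive one at a time after re-protection has completed, and no metadata or reserve overhead. The cluster sizes are hypothetical.

```python
def tolerable_sequential_failures(nodes, node_capacity_tb, data_tb, copies=2):
    """Count how many nodes can fail one after another while the survivors
    can still hold every copy of the data.

    Simplified model: identical nodes, `copies` replicas of all data, and
    each failure happens only after the previous re-protection has finished.
    """
    raw_needed = data_tb * copies
    failures = 0
    while True:
        survivors = nodes - failures - 1
        if survivors < copies:
            break  # not enough nodes left to keep the copies on separate nodes
        if survivors * node_capacity_tb < raw_needed:
            break  # out of physical space to re-protect
        failures += 1
    return failures


# Hypothetical clusters: 10 TB of raw capacity per node.
print(tolerable_sequential_failures(nodes=8, node_capacity_tb=10, data_tb=20))
print(tolerable_sequential_failures(nodes=4, node_capacity_tb=10, data_tb=18))
```

The second cluster is the one to watch: it is so full that the three surviving nodes could not re-protect after even a single failure, which is exactly when a next-business-day support model starts to look risky.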

This is discussed in more detail in a Nutanix blog post by Andre Leibovici.

To find out more about options for scale-out infrastructure, try talking to ViFX.

Filed under: Hyperconverged Storage, Nutanix, VMware, XIV | Leave a comment »

Just a quick blog post today in the run up to Christmas week and I thought I’d briefly summarize some of the things I have been dealing with recently and also touch on the role of the I.T. department as we move boldly into a cloudy world.

We have seen I.T. move through the virtualization phase to deliver greater efficiency and some have moved on to the Cloud phase to deliver more automation, elasticity and metering. Cloud can be private, public, community or hybrid, so Cloud does not necessarily imply an external service provider.

One of the things that has become clear is the need for right-sizing as part of any move to an external provider. External provision has a low base cost and a high metered cost, so you get best value by making sure your allowances for CPU, RAM and disk are a reasonably tight fit with your actual requirements, and relying on service elasticity to expand as needed. The traditional approach of building a lot of advance headroom into everything will cost you dearly. You cannot expect an external provider to deliver “your mess for less” and in fact what you will get if you don’t right size is “your mess for more”.
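A quick worked example shows how the metered component dominates. All prices and allocations below are hypothetical, chosen only to show the shape of the calculation, and are held in cents to keep the arithmetic exact.

```python
# Hypothetical monthly prices in cents; not any real provider's rate card.
BASE_FEE_CENTS = 5000
RATE_CENTS = {"vcpu": 3000, "ram_gb": 800, "disk_gb": 10}


def monthly_cost(allocation):
    """Monthly cost in dollars: low fixed base plus metered charges."""
    metered = sum(RATE_CENTS[res] * allocation[res] for res in RATE_CENTS)
    return (BASE_FEE_CENTS + metered) / 100


# The same hypothetical server, sized two ways.
with_headroom = {"vcpu": 8, "ram_gb": 64, "disk_gb": 2000}  # traditional advance headroom
right_sized = {"vcpu": 4, "ram_gb": 24, "disk_gb": 800}     # close fit to actual usage

print(f"with headroom: ${monthly_cost(with_headroom):,.2f} per month")
print(f"right-sized  : ${monthly_cost(right_sized):,.2f} per month")
```

With these made-up numbers the headroom more than doubles the bill, and because the base fee is small almost all of the difference is metered capacity that sits idle.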

And it’s not necessarily true that all of your services are best met by the one or two tiers that a single Cloud provider offers. This is where the Hybrid Cloud comes in, and more than that, this is where a Cloud Management Platform (CMP) function comes in.

“Any substantive cloud strategy will ultimately require using multiple cloud services from different providers, and a combination of both internal and external cloud.” Gartner, September 2013, (Hybrid Cloud Is Driving the Shift From Control to Coordination).

A CMP such as VMware’s vRealize Automation, RightScale, or Scalr can actually take you one step further than a simple Hybrid Cloud. A CMP can allow you to right-locate your services in a policy-driven and centrally managed way. This might mean keeping some services in-house, some in an enterprise I.T. focused Cloud with a high level of performance and wrap-around services, and some in a race-to-the-bottom Public Cloud focused primarily on price.

Some organisations are indeed consuming multiple services from multiple providers, but very few are managing this in a co-ordinated policy-driven manner. The kinds of problems that can arise are:

This layer of policy and management has a natural home with the I.T. department, but as an enabler for enterprise-wide in-policy consumption rather than as an obstacle.

With the Service Brokering Capability, I.T. becomes the central point of control, provision, self-service and integration for all IT services regardless of whether they are sourced internally or externally. This allows an organisation to mitigate the risks and take the opportunities associated with Cloud.

I will be enjoying the Christmas break and extending that well into January as is traditional in this part of the world where Christmas coincides with the start of Summer.

Happy holidays to all.

Filed under: Cloud | Leave a comment »

I remember being entertained by Larry Ellison’s Cloud Computing rant back in 2009 in which he pointed out that cloud was really just processors and memory and operating systems and databases and storage and the internet. While Larry was making a valid point, and he also made a point about IT being a fashion-driven industry, the positive goals of Cloud Computing should by now be much clearer to everyone.

When we talk about Cloud Computing it’s probably important that we try to work from a common understanding of what Cloud is and what the terms mean, and that’s where NIST comes in.

The National Institute of Standards and Technology (NIST) is an agency of the US Department of Commerce. In 2011, two years after Larry Ellison’s outburst, and after many drafts and years of research and discussion, NIST published their ‘Cloud Computing Definition’ stating:

“The definition is intended to serve as a means for broad comparisons of cloud services and deployment strategies, and to provide a baseline for discussion from what is cloud computing to how to best use cloud computing”.

“When agencies or companies use this definition they have a tool to determine the extent to which the information technology implementations they are considering meet the cloud characteristics and models. This is important because by adopting an authentic cloud, they are more likely to reap the promised benefits of cloud—cost savings, energy savings, rapid deployment and customer empowerment.”

The definition lists the five essential characteristics, the three service models and the four deployment models. I have summarized them in this blog post so as to do my small bit in encouraging the adoption of this definition as widely as possible to give us a common language and measuring stick for assessing the value of Cloud Computing.

Note that NIST has resisted the urge to go on to define additional services such as Backup as a Service (BaaS), Desktop as a Service (DaaS), Disaster Recovery as a Service (DRaaS) etc, arguing that these are already covered in one way or another by the three ‘standard’ service models. This does lead to an interesting situation where one vendor will offer DRaaS or BaaS effectively as an IaaS offering, and another will offer it under more of a SaaS or PaaS model.

The NIST reference architecture also talks about the importance of the brokering function, which allows you to seamlessly deploy across a range of internal and external resources according to the policies you have set (e.g. cost, performance, sovereignty, security).

The NIST definition of Cloud Computing is the one adopted by ViFX and it is the simplest, clearest and best-researched definition of Cloud Computing I have come across.

On 22nd October 2014 NIST published a new document “US Government Cloud Computing Technology Roadmap” in two volumes which identifies ten high priority requirements for Cloud Computing adoption across the five areas of:

The purpose of the document is to provide a cloud roadmap for US Government agencies highlighting ten high priority requirements to ensure that the benefits of cloud computing can be realized. Requirements seven and eight are particular to the US-Government but the others are generally applicable. My interpretation of NIST’s ten requirements is as follows:

These are all worthwhile requirements, and there’s also a loopback here to some of Larry Ellison’s comments. Larry spoke about seeing value in rental arrangements, but also touched on the importance of innovation. NIST is trying to standardize and level the playing field to maximize value for consumers, but history tells us that vendors will try to innovate to differentiate themselves. For example, with the launch of VMware’s vCloud Air we are seeing the dominant player in infrastructure management software today staking its claim to establish itself as the de facto software standard for hybrid cloud. But that is really a topic for another day…

Filed under: Cloud | Leave a comment »

I recall Tom West (Chief Scientist at Data General, and star of Soul of a New Machine) once saying to me when he visited New Zealand that there was an old saying “Hardware lasts three years, Operating Systems last 20 years, but applications can go on forever.”

Over the years I have known many application developers and several development managers, and one thing that they seem to agree on is that it is almost impossible to maintain good code structure inside an app over a period of many years. The pressures of deadlines for features, changes in market, fashion and the way people use applications, the occasional weak programmer, and the occasional weak dev manager, or temporary lapse in discipline due to other pressures all contribute to fragmentation over time. It is generally by this slow attrition that apps end up being full of structural compromises and the occasional corner that is complete spaghetti.

I am sure there are exceptions, and there can be periodic rebuilds that improve things, but rebuilds are expensive.

If I think about the OS layer, I recall Data General rebuilding much of their DG/UX UNIX kernel to make it more structured because they considered the System V code to be pretty loose. Similarly IBM rebuilt UNIX into a more structured AIX kernel around the same time, and Digital UNIX (OSF/1) was also a rebuild based on Mach. Ironically HP-UX eventually won out over Digital UNIX after the merger, with HP-UX rumoured to be the much less structured product, a choice that I’m told has slowed a lot of ongoing development. Microsoft rebuilt Windows as NT and Apple rebuilt Mac OS to base it on the Mach kernel.

So where am I heading with this?

Well I have discussed this topic with a couple of people in recent times in relation to storage operating systems. If I line up some storage OS’s and their approximate date of original release you’ll see what I mean:

| Storage OS | Original release | Age (as of 2014) |
| --- | --- | --- |
| NetApp Data ONTAP | 1992 | 22 years |
| EMC VNX / CLARiiON | 1993 | 21 years |
| IBM DS8000 (assuming ESS code base) | 1999 | 15 years |
| HP 3PAR | 2002 | 12 years |
| IBM Storwize | 2003 | 11 years |
| IBM XIV / Nextra | 2006 | 8 years |
| Nimble Storage | 2010 | 4 years |

I’m not trying to suggest that this is a line-up in reverse order of quality, and no doubt some vendors might claim rebuilds or superb structural discipline, but knowing what I know about software development, the age of the original code is certainly a point of interest.

With the current market disruption in storage, cost pressures are bound to take their toll on development quality, and the problem is amplified if vendors try to save money by out-sourcing development to non-integrated teams in low-cost countries (e.g. build your GUI in Romania, or your iSCSI module in India).

Filed under: N Series, Nimble, SAN Volume Controller, Storwize V7000, XIV | 8 Comments »

Decoupling storage performance from storage capacity is an interesting concept that has gained extra attention in recent times. Decoupling is predicated on a desire to scale performance when you need performance and to scale capacity when you need capacity, rather than traditional spindle-based scaling delivering both performance and capacity.

Also relevant is the idea that today’s legacy disk systems are holding back app performance. For example, VMware apparently claimed that 70% of all app performance support calls were caused by external disk systems.

IT operations have spent the last 10 years trying to keep up with capacity growth, with less focus on performance growth. The advent of flash has however shown that even though you might not have a pressing storage performance problem, if you add flash your whole app environment will generally run faster and that can mean business advantages ranging from better customer experiences to more accurate business decision making.

My favorite example of performance affecting customer experience is from my past dealings with an ISP of whom I was a residential customer. I was talking to a call centre operator who explained to me that ‘the computer was slow’ and that it would take a while to pull up the information I was seeking. We chatted as he slowly navigated the system, and as we waited, one of the things he was keen to chat about was how much he disliked working for that ISP : o

I have previously referenced a mobile phone company in the US who replaced all of their call centre storage with flash, specifically so as to deliver a better customer experience. The challenge with that is cost. The CIO was quoted as saying that the cost to go all flash was not much more per TB than he had paid for tier1 storage in the previous buying cycle (i.e. 3 or maybe 5 years earlier). So effectively he was conceding that he was paying more per TB for tier1 storage now than he was some years ago. Because the environment deployed did not decouple performance from capacity however, that company has almost certainly significantly over-provisioned storage performance, hence the cost per TB being higher than on the last buying cycle.

There are many examples of storage performance improvements leading to better business decisions, most typically in the area of data warehousing. When business intelligence reports have more up to date data in them, and they run more quickly, they are used more often and decisions are more likely to be evidence-based rather than based on intuition. I recall one CIO telling me about a meeting of the executive leadership team of his company some years ago where each exec was asked to write down the name of the company’s largest supplier – and each wrote a different name – illustrating the risk of making decisions based on intuition rather than on evidence/business intelligence.

Of course we have always been able to decouple performance and capacity to some extent, and it was traditionally called tiering. You could run your databases on small fast drives in RAID10 and your less demanding storage on larger drives with RAID5 or RAID6. What that didn’t necessarily give you was a lot of flexibility.

Products like IBM’s SAN Volume Controller introduced flexibility to move volumes around between tiers in real-time, and more recently VMware’s Storage vMotion has provided a sub-set of the same functionality.

And then sub-lun tiering (Automatic Data Relocation, Easy Tier, FAST, etc) reduced the need for volume migration as a means of managing performance, by automatically promoting hot chunks to flash, and dropping cooler chunks to slower disks. You could decouple performance from capacity somewhat by choosing your flash to disk ratio appropriately, but you still typically had to be careful with these solutions since the performance of, for example, random writes that do not go to flash would be heavily dependent on the disk spindle count and speed.
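The basic idea of promoting hot chunks and demoting cool ones can be sketched in a few lines. This is a deliberately naive model, assuming a per-chunk access count and a fixed number of flash slots; it is not how Easy Tier, FAST or any other vendor implementation actually decides placement.

```python
def plan_tiers(chunk_heat, flash_slots):
    """Split chunks into (flash, disk) sets, putting the hottest chunks on flash.

    chunk_heat: dict mapping chunk id -> recent access count (hypothetical metric).
    flash_slots: how many chunks the flash tier can hold.
    """
    # Sort hottest first; break ties by chunk id so the result is deterministic.
    ranked = sorted(chunk_heat, key=lambda chunk: (-chunk_heat[chunk], chunk))
    flash = set(ranked[:flash_slots])
    disk = set(ranked[flash_slots:])
    return flash, disk


heat = {"a": 5, "b": 90, "c": 40, "d": 1}
flash, disk = plan_tiers(heat, flash_slots=2)
print("flash:", sorted(flash), "disk:", sorted(disk))
```

Note what the sketch leaves out: anything that lands in the `disk` set still depends on spindle count and speed, which is exactly the caveat about random writes that miss flash.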

So for the most part, decoupling storage performance and capacity in an existing disk system has been about adding flash and trying not to hit internal bottlenecks.

Traditional random I/O performance is therefore a function of:

Nimble Storage uses flash to accelerate random reads, and accelerates writes through compression into sequential 4.5MB stripes (compare this to IBM’s Storwize RtC which compresses into 32K chunks and you can see that what Nimble is doing is a little different).

Nimble performance is therefore primarily a function of

The number of spindles is not quite so important when you’re writing 4.5MB stripes. Nimble systems generally support at least 190 TB nett (if I assume 1.5x compression average, or 254 TB if you expect 2x) from 57 disks and they claim that performance is pretty much decoupled from disk space since you will generally hit the wall on flash and CPU before you hit the wall on sequential writes to disk. Also this kind of decoupling allows you to get good performance and capacity in a very small amount of rack space. Nimble also offers CPU scaling in the form of a scale-out four-way cluster.
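The two nett capacity figures above are the same usable capacity viewed through different compression assumptions. A quick check, assuming roughly 127 TB usable (a figure back-derived here from the 190 TB and 254 TB numbers, not a published Nimble specification):

```python
def effective_capacity_tb(usable_tb, compression_ratio):
    """Nett (effective) capacity once an average compression ratio is applied."""
    return usable_tb * compression_ratio


# Assumed figure: ~127 TB usable, inferred from the nett numbers quoted above.
USABLE_TB = 127

for ratio in (1.5, 2.0):
    print(f"{ratio}x compression -> {effective_capacity_tb(USABLE_TB, ratio)} TB nett")
```

At 1.5x this gives 190.5 TB and at 2x it gives 254 TB, which lines up with the figures quoted.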

Nimble have come closer to decoupling performance and capacity than any other external storage vendor I have seen.

PernixData Flash Virtualization Platform (FVP) is a software solution designed to build a flash read/write cache inside a VMware ESXi cluster, thereby accelerating I/Os without needing to add anything to your external disk system. PernixData argue that it is more cost effective and efficient to add flash into the ESXi hosts than it is to add them into external storage systems. This has something in common with the current trend for converged scale-out server/storage solutions, but PernixData also works with existing external SAN environments.

There is criticism that flash technologies deployed in external storage are too far away from the app to be efficient. I recall Amit Dave (IBM Distinguished Engineer) recounting an analogy of I/O to eating, for which I have created my own version below:

PernixData works by keeping your data closer to the CPU – decoupling performance and capacity by focusing on a server-side caching layer and scaling alongside your compute ESXi cluster. So this is analogous to getting food from your table rather than food from your garden. With PernixData you tend to scale performance as you add more compute nodes, rather than when you add more back-end capacity.

Decoupling as a theoretical concept is surely a good thing – independent scaling in two dimensions – and it is especially nice if it can be done without introducing significant extra cost, complexity or management overhead.

It is however probably also fair to say that many other systems can approximate the effect, albeit with a little more complexity.

———————————————————————————————————-

Disclosures:

Jim Kelly holds PernixPrime accreditation from PernixData and is a certified Nimble Storage Sales Professional. ViFX is a reseller of both Nimble Storage and PernixData.

Filed under: Flash, Nimble, PernixData, VMware | Leave a comment »

The clustered/scale-out storage world keeps getting more and more interesting and, some would say, more and more confusing.

There are too many to list them all here, but here are block diagrams depicting seven interesting storage or converged hardware architectures. See if you can decipher my diagrams and match the labels by choosing between the three sets of options in the multi-choice poll at the bottom of the page:

| Diagram | Architecture |
| --- | --- |
| A | VMware EVO: RACK |
| B | IBM XIV |
| C | VMware EVO: RAIL |
| D | Nutanix |
| E | Nimble |
| F | IBM GPFS Storage Server (GSS) |
| G | VMware Virtual SAN |

Filed under: GPFS, Hyperconverged Storage, Nimble, Nutanix, VMware, XIV | 2 Comments »

This is simply a re-blogging of an interesting discussion by James Knapp at http://www.vifx.co.nz/testing-the-hyper-convergence-waters/ looking at VMware Virtual SAN. Even more interesting than the blog post however is the whitepaper “How hypervisor convergence is reinventing storage for the pay-as-you-grow era” which ViFX has come up with as a contribution to the debate/discussion around Hypervisor storage.

I would recommend going to the first link for a quick read of what James has to say and then downloading the whitepaper from there for a more detailed view of the technology.

Filed under: Hyperconverged Storage, VMware | Leave a comment »

The phrase ‘Software-defined Storage’ (SDS) has quickly become one of the most widely used marketing buzz terms in storage. It seems to have originated from Nicira’s use of the term ‘Software-defined Networking’ and was then adopted by VMware when they bought Nicira in 2012, where it evolved to become the ‘Software-defined Data Center’ including ‘Software-defined Storage’. VMware’s VSAN technology therefore has the top of mind position when we are talking about SDS. I really wish they’d called it something other than VSAN though, so as to avoid the clash with the ANSI T11 VSAN standard developed by Cisco.

I have seen IBM regularly use the term ‘Software-defined Storage’ to refer to:

I recently saw someone at IBM referring to FlashSystem 840 as SDS even though to my mind it is very much a hardware/firmware-defined ultra-low-latency system with a very thin layer of software so as to avoid adding latency.

Interestingly, IBM does not seem to market XIV as SDS, even though it is clearly a software solution running on commodity hardware that has been ‘applianced’ so as to maintain reliability and supportability.

Let’s take a quick look at the contenders:

1. GPFS: GPFS is a file system with a lot of storage features built in or added-on, including de-clustered RAID, policy-based file tiering, snapshots, block replication, support for NAS protocols, WAN caching, continuous data protection, single namespace clustering, HSM integration, TSM backup integration, and even a nice new GUI. GPFS is the current basis for IBM’s NAS products (SONAS and V7000U) as well as the GSS (GPFS Storage Server) which is currently targeted at HPC markets but I suspect is likely to re-emerge as a more broadly targeted product in 2015. I get the impression that GPFS may well be the basis of IBM’s SDS strategy going forward.

2. Storwize: The Storwize family is derived from IBM’s SAN Volume Controller technology and it has always been a software-defined product, but tightly integrated to hardware so as to control reliability and supportability. In the Storwize V7000U we see the coming together of Storwize and GPFS, and at some point IBM will need to make the call whether to stay with the DS8000-derived RAID that is in Storwize currently, or move to the GPFS-based de-clustered RAID. I’d be very surprised if GPFS hasn’t already won that long-term strategy argument.

3. Virtual Storage Center: The next contender in the great SDS shootout is IBM’s Virtual Storage Center and its sub-component Tivoli Storage Productivity Center. Within some parts of IBM, VSC is talked about as the key to SDS. VSC is edition dependent but usually includes the SAN Volume Controller / Storwize code developed by IBM Systems and Technology Group, as well as the TPC and FlashCopy Manager code developed by IBM Software Group, plus some additional TPC analytics and automation. VSC gives you a tremendous amount of functionality to manage a large complex site but it requires real commitment to secure that value. I think of VSC and XIV as the polar opposites of IBM’s storage product line, even though some will suggest you do both. XIV drives out complexity based on a kind of 80/20 rule and VSC is designed to let you manage and automate a complex environment.

Commodity Hardware: Many proponents of SDS will claim that it’s not really SDS unless it runs on pretty much any commodity server. GPFS and VSC qualify by this definition, but Storwize does not, unless you count the fact that SVC nodes are x3650 or x3550 servers. However, we are already seeing the rise of certified VMware VSAN-ready nodes as a way to control reliability and supportability, so perhaps we are heading for a happy medium between the two extremes of a traditional HCL menu and a fully buttoned down appliance.

Product Strategy: While IBM has been pretty clear in defining its focus markets – Cloud, Analytics, Mobile, Social, Security (the ‘CAMSS’ message that is repeatedly referred to inside IBM) I think it has been somewhat less clear in articulating a clear and consistent storage strategy, and I am finding that as the storage market matures, smart people are increasingly wanting to know what the vendors’ strategies are. I say vendors plural because I see the same lack of strategic clarity when I look at EMC and HP for example. That’s not to say the products aren’t good, or the roadmaps are wrong, but just that the long-term strategy is either not well defined or not clearly articulated.

It’s easier for new players and niche players of course, and VMware’s Software-defined Storage strategy, for example, is both well-defined and clearly articulated, which will inevitably make it a baseline for comparison with the strategies of the traditional storage vendors.

A/NZ STG Symposium: For the A/NZ audience, if you want to understand IBM’s SDS product strategy, the 2014 STG Tech Symposium in August is the perfect opportunity. Speakers include Sven Oehme from IBM Research who is deeply involved with GPFS development, Barry Whyte from IBM STG in Hursley who is deeply involved in Storwize development, and Dietmar Noll from IBM in Frankfurt who is deeply involved in the development of Virtual Storage Center.

Filed under: Flash, GPFS, SAN Volume Controller, SONAS, Storwize V7000, Tivoli, VMware, XIV | Leave a comment »

Just a quick post to let readers know that I have resigned from IBM after 14 years with the company and I’m looking forward to starting work at ViFX on Monday 11th August, which it seems also happens to be Steve Wozniak’s birthday.

I will work out in time what this means for the blog (my move to ViFX, not Steve’s birthday) but it’s pretty likely that I will also start looking at some non-IBM technologies – maybe including such things as VMware, Nutanix, Commvault, Actifio, Violin and Nimble Storage.

And having failed to create any meaningful link whatsoever between my move and the birth of the Woz I will leave it at that… until the 11th : )

Filed under: Actifio, Commvault, Flash, Nimble, Nutanix, Violin, VMware | 1 Comment »

Back in 2012 after IBM announced Real-time Compression (RtC) for Storwize disk systems I covered the technology in a post entitled “Freshly Squeezed”. The challenge with RtC in practice turned out to be that on many workloads it just couldn’t get the CPU resources it needed, and I/O rates were very disappointing, especially in its newly-released un-tuned state.

We quickly learned that lesson and IBM’s Disk Magic was an essential tool to warn us about unsuitable workloads. Even in August 2013 when I was asked at the Auckland IBM STG Tech Symposium “Do you recommend RtC for general use?” my answer was “Wait until mid 2014”.

Now that the new V7000 (I’m not sure we’re supposed to call it Gen2, but that works for me) is out, I’m hoping that time has come.

The New V7000: I was really impressed when we announced the new V7000 in May 2014 with its 504 disk drives, faster CPUs, 2 x RtC (Intel Coleto Creek comms encryption processor) offload engines per node canister, and extra cache resources (up to 64GB RAM per node canister, of which 36GB is dedicated to RtC) but having been caught out in 2012, I wanted to see what Disk Magic had to say about it before I started recommending it to people. That’s why this post has taken until now to happen – Disk Magic 9.16.0 has just been released.

After a quick look at Disk Magic I almost titled this post “Bigger, Better, Juicier than before” but I felt I should restrain myself a little, and there are still a few devils in the details.

50% Extra: I have been working on the conservative assumption of getting an extra 50% nett space from RtC across an entire disk system if little was known about the data. It is best, however, to run IBM’s Comprestimator for a better picture if you have access to do that.

Getting an extra 50% is the same as setting Capacity Magic to use 33% compression. Until now I believed that this was a very conservative position, but one thing I really don’t enjoy is setting an expectation and then being unable to deliver on it.
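The equivalence between "an extra 50%" and "33% compression" is just two ways of expressing a 1.5:1 ratio. A small sketch, assuming the Capacity Magic percentage means the fraction of space saved (which is what the equivalence above implies); the function names are made up for illustration:

```python
def savings_from_extra_space(extra_fraction):
    """Convert 'extra nett space' (0.5 = 50% extra) into a space-saving fraction."""
    ratio = 1 + extra_fraction          # 50% extra is a 1.5:1 compression ratio
    return 1 - 1 / ratio


def extra_space_from_savings(savings_fraction):
    """Convert a space-saving fraction (0.33 = 33% saved) into extra nett space."""
    return 1 / (1 - savings_fraction) - 1


print(f"50% extra space  = {savings_from_extra_space(0.5):.1%} compression")
print(f"33.3% compression = {extra_space_from_savings(1 / 3):.1%} extra space")
```

The same conversion shows why the two figures diverge quickly: 2:1 compression is 100% extra space but only a 50% saving.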

Easy Tier: The one major deficiency in Disk Magic 9.16.0 is that you can’t model Easy Tier and RtC in the same model. That is pretty annoying since on the new V7000 you will almost certainly want both. So unfortunately that means Disk Magic 9.16.0 is still a bit of a waste of time in testing most real-life configurations that include RtC and the real measure will have to wait until the next release due in August 2014.

What you can use 9.16.0 for, however, is to validate the performance of RtC (without Easy Tier) and look at the usage on the new offload engines. What I found was that the load on the RtC engines is still very dependent on the I/O size.

I/O Size: When doing general modelling I used to use 16KB as a default size since that is the kind of figure I had generally seen in mixed workload environments, but in more recent times I have gone back to using the default of 4KB since the automatic cache modelling in Disk Magic takes a lot of notice of the I/O size when deciding how random the workload is likely to be. Using 4KB forces Disk Magic to assume that the workload is very random, and that once again builds in some headroom (all part of my under-promise+over-deliver strategy). If you use 16KB, or even 24KB as I have seen in some VMware environments, then Disk Magic will assume there are a lot of sequential I/Os and I’m not entirely comfortable with the huge modeled performance improvement you get from that assumption. (For the same reason these days I tend to model Easy Tier using the ‘Intermediate’ setting rather than the default/recommended ‘High Skew’ setting.)

However, using a small I/O size in your Disk Magic modelling has the exact opposite effect when modelling RtC. RtC runs really well when the I/O size is small, and not so well when the I/O size is large. So my past conservative practice of modelling a small I/O size might not be so conservative when it comes to RtC.

Different Data Types: In the past I have also tended to build Disk Magic models with one server, because my testing showed that having several servers or a single server gave the same result. All Disk Magic cared about was the number of I/O requests coming in over a given number of fibres. Now however we might need to take more careful account of data types and focus less on the overall average I/O size and more on the individual workloads and which are suitable for RtC and which are not.

50% Busy: And just as we should all be aware that going over 50% busy on a dual controller system is a recipe for problems should we lose a controller for any reason (and faults are also more likely to happen when the system is being pushed hard) similarly going over 50% busy on your Coleto Creek RtC offload engines would also lead to problems if you lose a controller.
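The 50% rule follows from simple arithmetic once you assume the load is evenly balanced across the two controllers (or offload engines) and lands entirely on the survivor after a failure. A minimal sketch under that simplifying assumption:

```python
def busy_after_failover(per_controller_busy):
    """Utilisation of the surviving controller if its partner's load moves across in full."""
    return 2 * per_controller_busy


def has_failover_headroom(per_controller_busy):
    """True if the survivor can absorb the combined load without exceeding 100% busy."""
    return busy_after_failover(per_controller_busy) <= 1.0


for busy in (0.4, 0.5, 0.6):
    print(f"{busy:.0%} busy each -> survivor at {busy_after_failover(busy):.0%}, "
          f"ok={has_failover_headroom(busy)}")
```

Real systems degrade before they reach 100%, so in practice the sketch is optimistic; it only shows where the hard limit sits.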

I always recommend that you use all 4 compression engines +extra cache on each dual controller V7000 and now I’m planning to work on the assumption that, yes I can get 1.5:1 compression overall, but that is more likely to come from 50% being without compression and 50% being at 2:1 compression and my Disk Magic models will reflect that. So I still expect to need 66% physical nett to get to 100% target, but I’m now going to treat each model as being made up of at least two pools, one compressed and one not.
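The two-pool assumption can be checked with a blended-ratio calculation. This sketch reads the 50/50 split as a split of physical capacity (an interpretation, chosen because it is the one that reproduces the 1.5:1 and 66% figures); the function names are illustrative only.

```python
def overall_ratio(pools):
    """Blended compression ratio for pools given as (physical_share, ratio) pairs."""
    physical = sum(share for share, _ in pools)
    logical = sum(share * ratio for share, ratio in pools)
    return logical / physical


def physical_needed(logical_target, pools):
    """Physical nett capacity required to present `logical_target` of usable space."""
    return logical_target / overall_ratio(pools)


# Half the physical capacity uncompressed (1:1), half compressed at 2:1.
POOLS = [(0.5, 1.0), (0.5, 2.0)]

print(f"overall ratio: {overall_ratio(POOLS)}:1")
print(f"physical needed for 100 units: {physical_needed(100, POOLS):.1f}")
```

If the split were instead 50/50 by logical data, the blend would drop to about 1.33:1, so it is worth being explicit about which split a given model uses.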

Transparent Compression: RtC on the new Gen2 V7000 is a huge improvement over the Gen1 V7000. The hardware has been specifically designed to support it, and remember that it is truly transparent and doesn’t lose compression over time or require any kind of batch processing. That all goes to make it a very nice technology solution that most V7000 buyers should take advantage of.

Filed under: Storwize V7000 | Leave a comment »

Ever since companies like Data General moved RAID control into an external disk sub-system back in the early ’90s it has been standard received knowledge that servers and storage should be separate.

While the capital cost of storage in the server is generally lower than for an external centralised storage subsystem, having storage as part of each server creates fragmentation and higher operational management overhead. Asset life-cycle management is also a consideration – servers typically last 3 years and storage can often be sweated for 5 years since the pace of storage technology change has traditionally been slower than for servers.

When you look at some common storage systems however, what you see is that they do include servers that have been ‘applianced’ i.e. closed off to general apps, so as to ensure reliability and supportability.

At one point the DS8000 was available with LPAR separation into two storage servers (intended to cater to a split production/non-production environment) and there was talk at the time of the possibility of other apps such as TSM being able to be loaded onto an LPAR (a feature that was never released).

Apps or features?: There are a bunch of apps that could be run on storage systems, and in fact many already are, except they are usually called ‘features’ rather than apps. The clearest examples are probably in the NAS world, where TSM and Space Management and SAMBA/CTDB and Ganesha/NFS, and maybe LTFS, for example, could all be treated as features.

I also recall NetApp once talking about a Fujitsu-only implementation of ONTAP that could be run in a VM on a blade server, and EMC has talked up the possibility of running apps on storage.

GPFS: In my last post I illustrated an example of using IBM’s GPFS to construct a server-based shared storage system. The challenge with these kinds of systems is that they put the onus on the installer/administrator to get it right, rather than the traditional storage appliance approach where the vendor pre-constructs the system.

Virtualization: Reliability and supportability are vital, but virtualization does allow the possibility that we could have ring-fenced partitions for core storage functions and still provide server capacity for a range of other data-oriented functions e.g. MapReduce, Hadoop, OpenStack Cinder & Swift, as well as apps like TSM and HSM, and maybe even things like compression, dedup, anti-virus, LTFS etc., but treated not so much as storage system features, but more as genuine apps that you can buy from 3rd parties or write yourself, just as you would with traditional apps on servers.

The question is not so much ‘can this be done’, but more, ‘is it a good thing to do’? Would it be a good thing to open up storage systems and expose the fact that these are truly software-defined systems running on servers, or does that just make support harder and add no real value (apart from providing a new fashion to follow in a fashion-driven industry)? My guess is that there is a gradual path towards a happy medium to be explored here.

Filed under: DS8000, GPFS, SAN Volume Controller, SONAS, Storwize V7000, Tivoli, VMware, XIV | Leave a comment »

GPFS (General Parallel File System) is one of those very cool technologies that you can do so much with that it’s actually fun to design solutions with it (provided you’re the kind of person that also gets a kick from a nice elegant mathematical proof by induction).

Back in 2010 I was asked by an IBM systems software strategist for my opinion as to whether GPFS had potential as a mainstream product, or if it was best kept back as an underlying component in mainstream solutions. I was strongly in the component camp, but now I almost regret that, because it may be that really the only thing that was holding GPFS back was the lack of its own comprehensive GUI. That is something I still hope will be addressed in the not too distant future.

Anyway, this is a sample design that attempts to show some of the things you can do with GPFS by way of building a software defined storage and server environment.

The central box shows GPFS servers (virtualized in this example) and the left and right boxes show GPFS clients. GPFS also supports ILM policies between disk tiers and out to LTFS tape, as well as optional integration with HSM (via Tivoli Space Management) and fast efficient backup with Tivoli Storage Manager.

There are of course a few caveats and restrictions. Check out the GPFS infocenter for the technical details.

This second diagram shows a simpler view of how to build a highly available software defined storage environment. The example shows two physical servers, but you can add many servers and still have a single storage pool. Mirroring is on a per volume basis. Also you could use GPFS native RAID to build a RAID6 array in each server for example.

Filed under: GPFS, Tivoli, VMware | Leave a comment »

If you need scalable NAS and what you’re primarily interested in is capacity scaling, with less emphasis on performance, and more on cost-effective entry price, then you might be interested in building a scale-out NAS from Storwize V7000 Unified systems, especially if you have some block I/O requirements to go with your NAS requirements.

There are three ways that I can think of doing this and each has its strengths. The documentation on these options is not always easy to find, so these diagrams and bullets might help to show what is possible.

One key point that is not well understood is that clustering V7000 systems to a V7000U allows SMB2 access to all of the capacity – a feature that you don’t get if you virtualize secondary systems rather than cluster them.

And of course systems management is made relatively simple with the Storwize GUI.

Filed under: SONAS, Storwize V7000 | 2 Comments »

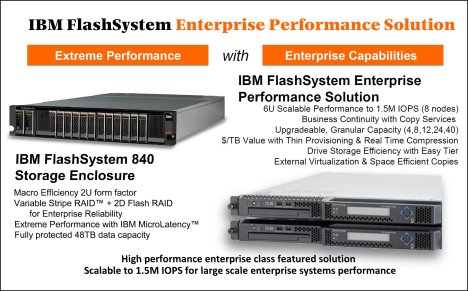

IBM’s Flash strategy is a two-pronged approach, targeting the two segments that IDC labels as:

Last week I outlined the new FlashSystem 840 and focused mainly on the Absolute Performance aspect. Absolute Performance for IBM means latencies down around 95 microseconds write and 135 microseconds read, whereas most Flash storage systems in the market are talking 500+ microseconds best case. I’m guessing that in the new world of I/O bound applications, having 3 or 4 times the latency overhead could be a real problem for those vendors at some stage.

This week however I’d like to focus on the Enterprise Flash market segment.

When we and IDC talk about Enterprise we are more concerned with the software stack and how it is used to address issues of:

The short answer to all of these is IBM’s SAN Volume Controller. Folks who are not very familiar with SVC often assume that SVC adds latency to storage. In the case of spinning disk systems, my experience has been that SVC reduces latency (due to intelligent caching effects) but takes about 5% off the top of maximum native IOPS. In the real world that means that things will almost always go faster with SVC than without it.

In the case of Flash, the picture is slightly different. The latencies of the FlashSystem 840 are so low that SVC caching does not fully compensate for other effects and the nett is that putting SVC in front of your FlashSystem 840 is likely to add around 100 microseconds of latency.

Yes that’s right, only 100 microseconds. I should add that I have not personally verified this, but have been told that is what we are seeing in IBM’s internal lab tests.

When you add 100 microseconds to the low latency of the FlashSystem 840 (95 microseconds write, 135 microseconds read) you still have numbers down below 250 microseconds, which is twice as fast as the numbers quoted on products like XtremIO and Violin 6200.
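As a quick check on that arithmetic, here is a minimal sketch using only the figures quoted in this post. The 100 microsecond SVC overhead is the unverified lab figure mentioned above, 500 microseconds is the best-case figure quoted for other flash systems, and the names are mine, purely for illustration.

```python
# Figures quoted in the post, all in microseconds.
FLASH_840_LATENCY_US = {"write": 95, "read": 135}
SVC_OVERHEAD_US = 100          # reported lab figure, not independently verified
COMPETITOR_BEST_CASE_US = 500  # "500+ microseconds best case"


def latency_with_svc(native_us, overhead_us=SVC_OVERHEAD_US):
    """Native device latency plus the extra hop through SVC."""
    return native_us + overhead_us


combined = {op: latency_with_svc(us) for op, us in FLASH_840_LATENCY_US.items()}

for op, us in combined.items():
    ratio = COMPETITOR_BEST_CASE_US / us
    print(f"{op}: {us} us with SVC, {ratio:.1f}x lower than {COMPETITOR_BEST_CASE_US} us")
```

Both combined figures stay under 250 microseconds, which is where the "twice as fast" comparison comes from.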

Even way back in 2008 we announced a benchmark result of 1 million IOPS with SVC and Flash, code-named Quicksilver. At the time the IBM statement said that IBM was planning a complete end-to-end systems approach to Flash and…

“Performance improvements of this magnitude can have profound implications for business, allowing two to three times the work to [be completed] in a given time frame for . . . time-sensitive applications like reservations systems, and financial program trading systems, and creating opportunity for entirely new insights in information-warehouses and analytics solutions”

So this is not new for IBM. The recently announced FlashSystem Solution with SVC is the culmination of six years of preparation (including SVC tuning) by IBM.

So you can understand now why IBM does not need to reinvent a whole separate scale-out offering of the sort that Whiptail Invicta (Cisco’s new EMC killer) and XtremIO Cluster (EMC’s new fat-boy SSD system) have tried to create. IBM can deliver a much more mature and feature-rich solution with consistent management and feature functions right across the board from the small V3700 with Easy Tier Flash right through to high-end SVC Flash Solutions like the one implemented by Sprint in 2013.

SVC brings proven data center credentials to scale-out Flash, delivering the full Storwize software stack while adding as little as 100 microseconds of latency. That is a good story and one that will not be easily matched by any competitor, and if the market would prefer something that is more tightly coupled from a hardware point of view then I don’t see why IBM couldn’t also deliver that in future if it wanted to.

So IBM has avoided the need to reinvent, develop, or buy-in a new immature scale-out mechanism for Flash. By using SVC you get FlashCopy snapshots and clones, as well as volume replication over IP, and Real-time Compression. But possibly most important of all is the full SVC interoperability matrix. How’s that for a software defined storage strategy that delivers rapid time-to-value in exactly the way it’s meant to?

For more info you can check out the IBM FlashSystem product page and the IBM Redbook Solution Guide “Implementing FlashSystem 840 with SAN Volume Controller”.

Filed under: Flash, SAN Volume Controller | 3 Comments »

Flash storage is at an interesting place and it’s worth taking the time to understand IBM’s new FlashSystem 840 and how it might be useful.

A traditional approach to flash is to treat it like a fast disk drive with a SAS interface, and assume that a faster version of traditional systems is the way of the future. This is not a bad idea, and with auto-tiering technologies this kind of approach was mastered by the big vendors some time ago, and can be seen for example in IBM’s Storwize family and DS8000, and as a cache layer in the XIV. Using auto-tiering we can perhaps expect large quantities of storage to deliver latencies around 5 milliseconds, rather than a more traditional 10 ms or higher (e.g. MS Exchange’s Jetstress test only fails when you get to 20 ms).

Some players want to use all SSDs in their disk systems, which you can do with Storwize for example, but this is again really just a variation on a fairly traditional approach and you’re generally looking at storage latencies down around one or two milliseconds. That sounds pretty good compared to 10 ms, but there are ways to do better and I suspect that SSD-based systems will not be where it’s at in 5 years’ time.

The IBM FlashSystem 840 is a little different and it uses flash chips, not SSDs. Its primary purpose is to be very, very low latency. We’re talking as low as 90 microseconds write, and 135 microseconds read. This is not a traditional system with a soup-to-nuts software stack. FlashSystem has a new Storwize GUI, but it is stripped back to keep it simple and to avoid anything that would impact latency.

This extreme low latency is a unique IBM proposition, since it turns out that even when other vendors use MLC flash chips instead of SSDs, by their own admission they generally still end up with latency close to 1 ms, presumably because of their controller and code-path overheads.

| Nett of 2-D RAID5 | 4 modules | 8 modules | 12 modules |
| --- | --- | --- | --- |
| 2 TB modules | 4 TB | 12 TB | 20 TB |
| 4 TB modules | 8 TB | 24 TB | 40 TB |
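The figures in the table are consistent with a simple rule of thumb: nett capacity is roughly the raw capacity less two modules' worth (taking the two module sizes as 2 TB and 4 TB). That rule is my inference from the numbers, not a description of how 2-D RAID5 is actually laid out, and the function name below is hypothetical. A minimal sketch:

```python
# Assumption: two modules' worth of capacity is given up to protection.
# This is inferred from the table, not taken from IBM documentation.
OVERHEAD_MODULES = 2


def usable_tb(module_count, module_size_tb, overhead_modules=OVERHEAD_MODULES):
    """Approximate nett capacity in TB for a given module count and size."""
    if module_count <= overhead_modules:
        raise ValueError("need more modules than the protection overhead")
    return (module_count - overhead_modules) * module_size_tb


for size_tb in (2, 4):
    row = [usable_tb(n, size_tb) for n in (4, 8, 12)]
    print(f"{size_tb} TB modules: {row} TB nett")
```

Treat this as a sizing aid that reproduces the table, and check real configurations against the vendor's own sizing tools.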

I’m thinking that prime targets for these systems include Databases and VDI, but also folks looking to future-proof their general performance. If you’re making a 5 year purchase, not everyone will want to buy a ‘mature’ SSD legacy-style flash solution, when they could instead buy into a disk-free architecture of the future.

But, as mentioned, FlashSystem does not have a full traditional software stack, so let’s consider the options if you need some of that stuff:

Right now, FlashSystem 840 is mainly about screamingly low latency and high performance, with some reasonable data center class credentials, and all at a pretty good price. If you have a data warehouse, or a database that wants that kind of I/O performance, or a VDI implementation that you want to de-risk, or a general workload that you want to future-proof, then maybe you should talk to IBM about FlashSystem 840.

Meanwhile I suggest you check out these docs:

Filed under: DS8000, Flash, SAN Volume Controller, Storwize V7000, Tivoli, VMware, XIV | 2 Comments »

Tip: When running production-style workloads alongside Global Mirror continuous replication secondary volumes on one Storwize system, best practice is to put the production and DR workloads into separate pools. This is especially important when the production workloads are write intensive.

Aside from write-intensive OLTP, OLAP etc, large file copies (e.g. zipping a 10GB flat file database export) can be the biggest hogs of write resource (cache and disk), especially where the backend disk is not write optimised (e.g. RAID6).

| Pools on your system | Max % of write cache any one pool can use |
| --- | --- |
| 1 | 100% |
| 2 | 66% |
| 3 | 40% |
| 4 | 30% |
| 5 | 25% |
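To make the effect of those limits concrete, here is a minimal sketch that treats the table above as a lookup and works out how much write cache a single runaway pool can never take from the others. The function names are mine, and the lookup deliberately covers only the pool counts listed in the table.

```python
# Upper limit (percent of write cache) that any single pool may consume,
# keyed by the number of pools on the system, as listed in the table above.
MAX_WRITE_CACHE_PCT = {1: 100, 2: 66, 3: 40, 4: 30, 5: 25}


def max_pool_cache_pct(pool_count):
    """Largest share of write cache one pool can use for this pool count."""
    try:
        return MAX_WRITE_CACHE_PCT[pool_count]
    except KeyError:
        raise ValueError(f"table only covers 1-5 pools, got {pool_count}") from None


def protected_from_one_pool_pct(pool_count):
    """Share of write cache that one busy pool cannot take from the rest."""
    return 100 - max_pool_cache_pct(pool_count)


for pools in sorted(MAX_WRITE_CACHE_PCT):
    print(f"{pools} pool(s): one pool capped at {max_pool_cache_pct(pools)}%, "
          f"{protected_from_one_pool_pct(pools)}% out of its reach")
```

With a single pool nothing is protected, which is the reasoning behind putting production and Global Mirror secondary volumes into separate pools.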

Not only is the RAID1 option much lower cost, but it is also ~10% faster. I’m not 100% sure we want to encourage folks to use 7200RPM drives at the Global Mirror target side, but the point I’m making is that RAID6 is not really ideal in a 100% write environment. Of course using Easy Tier (SSD assist) can help enormously [added 29th April 2014] in some situations, but not really with Global Mirror targets since the copy grain size is 256KiB and Easy Tier will ignore everything over 64KiB.

Filed under: SAN Volume Controller, Storwize V7000 | 2 Comments »

So following my recent blog post on SANSlide WAN optimization appliances for use with Storwize replication, IBM has just announced Storwize 7.2 (available December), which includes not only replication natively over IP networks (licensed as Global Mirror/Metro Mirror) but also SANSlide WAN optimization built in for free, i.e. to get the benefits of WAN optimization you no longer need to purchase Riverbed, Cisco WAAS or SANSlide appliances.

Admittedly, Global Mirror was a little behind the times in getting to a native IP implementation, but having got there, the developers obviously decided they wanted to do it in style and take the lead in this space, by offering a more optimized WAN replication experience than any of our competitors.

The industry problem with TCP/IP latency is the time it takes to acknowledge that your packets have arrived at the other end. You can’t send the next set of packets until you get that acknowledgement back. So on a high latency network you end up spending a lot of your time waiting, which means you can’t take proper advantage of the available bandwidth. Effective bandwidth usage can sometimes be reduced to only 20% of the actual bandwidth you are paying for.

The first time I heard this story was actually back in the mid-90s from a telco network engineer. His presentation was entitled something like “How latency can steal your bandwidth”.

SANSlide mitigates latency by virtualising the pipe with many connections. While one connection is waiting for the ACK another is sending data. Using many connections, the pipe can often be filled more than 95%.
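A rough way to see why latency steals bandwidth, and why many connections help, is the textbook window-per-round-trip model: a window-limited connection can send at most one window of data per round trip. The sketch below uses that generic model only. It is not SANSlide's actual algorithm, it ignores packet loss and slow start, and the link speed, window size and round-trip time are illustrative values I have picked, not measurements.

```python
import math


def single_connection_bps(window_bytes, rtt_s):
    """Most a window-limited connection can send: one window per round trip."""
    return window_bytes * 8 / rtt_s


def utilisation(link_bps, window_bytes, rtt_s, connections=1):
    """Fraction of the link filled by N such connections, capped at 100%."""
    achievable = connections * single_connection_bps(window_bytes, rtt_s)
    return min(1.0, achievable / link_bps)


def connections_to_fill(link_bps, window_bytes, rtt_s):
    """Smallest number of connections whose combined rate covers the link."""
    return math.ceil(link_bps / single_connection_bps(window_bytes, rtt_s))


# Illustrative values only (assumptions, not measurements).
LINK_BPS = 100e6           # 100 Mbit/s WAN link
WINDOW_BYTES = 64 * 1024   # 64 KiB window per connection
RTT_S = 0.030              # 30 ms round-trip time

needed = connections_to_fill(LINK_BPS, WINDOW_BYTES, RTT_S)
print(f"1 connection fills {utilisation(LINK_BPS, WINDOW_BYTES, RTT_S):.0%} of the link")
print(f"{needed} connections fill "
      f"{utilisation(LINK_BPS, WINDOW_BYTES, RTT_S, needed):.0%} of the link")
```

In this simplified model the per-connection rate falls as round-trip time rises, so the longer the link, the more parallel connections it takes to keep the pipe full.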

If you have existing FCIP routers you don’t need to rush out and switch over to IP replication with SANSlide, especially if your latency is reasonably low, but if you do have a high latency network it would be worth discussing your options with your local IBM Storwize expert. It might depend on the sophistication of your installed FCIP routers. Brocade for example suggests that the IBM SAN06B-R is pretty good at WAN optimization. So the graph below does not necessarily apply to all FCIP routers.

When you next compare long distance IBM Storwize replication to our competitors’ offerings, you might want to ask them to include the cost of WAN optimization appliances to get a full apples for apples comparison, or you might want to take into account that with IBM Storwize you will probably need a lot less bandwidth to achieve the same RPO.

Even when others do include products like Riverbed appliances with their offerings, SANSlide still has the advantage of being completely data-agnostic, so it doesn’t get confused or slow down when transmitting encrypted or compressed data like most other WAN optimization appliances do.

Free embedded SANSlide is only one of the cool new things in the IBM Storwize world. The folks in Hursley have been very busy. Check out Barry Whyte’s blog entry and the IBM Storwize product page if you haven’t done so already.

Filed under: SAN Volume Controller, Storwize V7000 | 11 Comments »

WAN optimization is not something that storage vendors traditionally put into their storage controllers. Storage replication traffic has to fend for itself out in the WAN world, and replication performance will usually suffer unless there are specific WAN optimization devices installed in the network.

For example, NetApp recommends Cisco WAAS as:

“an application acceleration and WAN optimization solution that allows storage managers to dramatically improve NetApp SnapMirror performance over the WAN.”

…because:

“…the rated throughput of high-bandwidth links cannot be fully utilized due to TCP behavior under conditions of high latency and high packet loss.”

EMC similarly endorses a range of WAN optimization products including those from Riverbed and Silver Peak.